It took a woman seven months of bouncing between specialists and imaging appointments before she could even start radiotherapy for the lump in her breast.

This is not fiction; it is a harrowing example of an inefficient healthcare system.

The seven months were not a medical necessity; they were the result of a lapse in coordination. At the moment, the only “solution” seems to be fitting everyone into one institution, which Grahame says feels like a 19th-century remedy for a modern problem.

With FHIR, Grahame Grieve ultimately aims to push the entire healthcare system to close the gaps in care that truly affect patients.

Grahame Grieve - The Inventor of FHIR

Grahame Grieve is often called the “Father of FHIR”. He is a pioneering figure in healthcare interoperability and the principal architect of FHIR (Fast Healthcare Interoperability Resources). By bridging the gap between clinical needs and software design, his work has revolutionized how health data is exchanged, enabling information sharing across diverse systems and ultimately improving patient care worldwide.

Let’s expand briefly on interoperability and FHIR.

In healthcare, interoperability means that different healthcare systems and tools (like EHRs) can talk to each other and share information. This ensures that patients’ medical histories, test results, and treatment plans stay consistent, accurate, and accessible to the right people, no matter which clinic, hospital, or system they use.

Your next question might be: what is FHIR’s role in this?

FHIR is a healthcare standard that enables the uniform exchange of health information between different systems. If you’re new to this world, check out our beginner’s guide to FHIR!

How did FHIR become the way it is today?

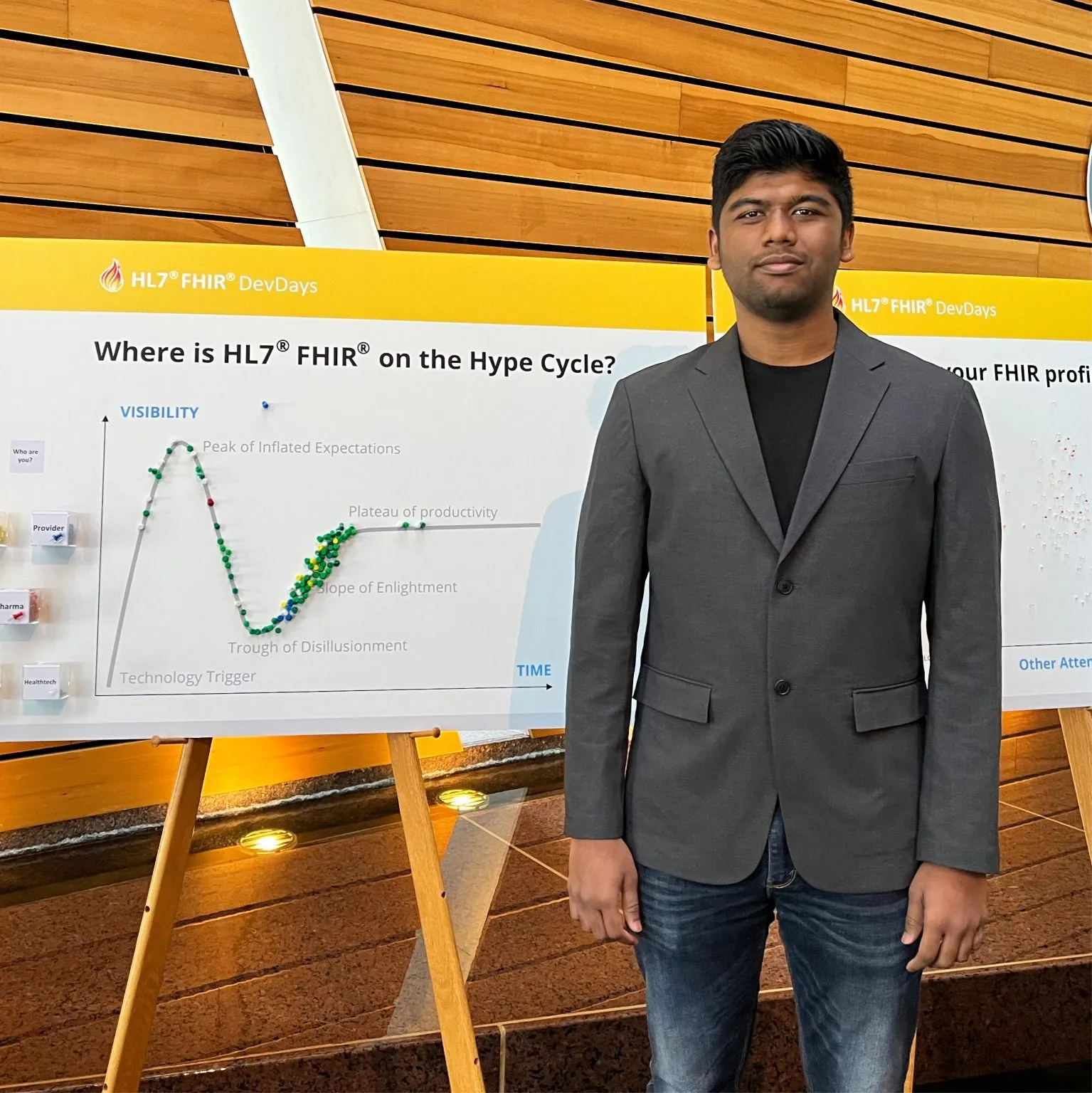

FHIR is the most popular healthcare IT standard today. As Gartner’s Hype Cycle suggests, it is normal human behavior to build up as much hype as possible around something new.

This is Medblocks founder Sidharth Ramesh at FHIR DevDays. The board behind him shows a vote run by the organizers to pin down “Where is HL7 FHIR on the Hype Cycle?”.


According to Grahame, three milestones stand out in making FHIR so popular.

The most significant technical milestone was when U.S. vendors decided to use FHIR for the patient- and provider-facing APIs they were required to offer for access to healthcare data.

The second was licensing. HL7 v2 dominated in the early stages, but working with it required specialists who knew a domain-specific tech stack (such as the MLLP transport). Those specialists were expensive and hard to find, and the specification license had to be paid for up front. Given all these factors, convincing the HL7 board to adopt an open-source license was a pivotal moment.

How was traditional HL7 different from FHIR? Read more about this here.

Lastly, although not a huge technical step, Apple’s decision to adopt FHIR without customizing it was massive for public perception.
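The MLLP transport mentioned above is a good example of the domain-specific plumbing HL7 v2 required. MLLP sends each message over a plain TCP connection, wrapped in a start byte (0x0B) and an end sequence (0x1C followed by a carriage return). The sketch below shows only that framing step; the sample message is hypothetical and heavily abbreviated, ASCII encoding is assumed, and a real integration would also handle sockets, acknowledgements, and partial reads.

```python
# MLLP envelope bytes: a vertical tab opens a block; a file separator plus carriage return closes it.
START_BLOCK = b"\x0b"
END_BLOCK = b"\x1c\r"


def mllp_frame(message: str) -> bytes:
    """Wrap an HL7 v2 message (segments separated by carriage returns) in an MLLP envelope."""
    return START_BLOCK + message.encode("ascii") + END_BLOCK  # ASCII assumed for this sketch


def mllp_unframe(data: bytes) -> str:
    """Remove the MLLP envelope, rejecting data that is not a complete frame."""
    if not (data.startswith(START_BLOCK) and data.endswith(END_BLOCK)):
        raise ValueError("not a complete MLLP frame")
    return data[len(START_BLOCK):-len(END_BLOCK)].decode("ascii")


# Hypothetical two-segment ADT^A01 message; real messages carry many more fields.
message = (
    "MSH|^~\\&|SENDER|HOSP|RECEIVER|HOSP|20240101120000||ADT^A01|MSG0001|P|2.5\r"
    "PID|1||12345^^^HOSP||DOE^JANE"
)
framed = mllp_frame(message)
print(framed[:1], framed[-2:])  # b'\x0b' b'\x1c\r'
```

By contrast, FHIR rides on ordinary HTTP(S) with JSON or XML payloads, which most web developers already know.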

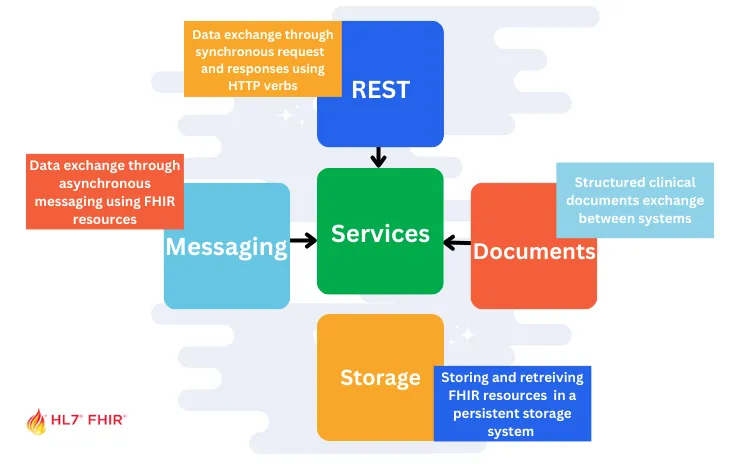

Story Behind FHIR Paradigms

In the decade Grahame spent working on data interoperability for vendors, customers, and governments, he noticed a recurring problem: transforming data from one paradigm (such as REST APIs, messaging, or documents) to another was expensive. Relying on a separate “vertical stack” standard for each paradigm was dragging the industry down.

So he did what any other person (read: genius) would do. He started from scratch and cherry-picked the best ideas from established solutions like V2, DICOM, CDA, openEHR, etc., with the main goal of reusing the same content across multiple paradigms without having to change it each time.
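FHIR’s answer to the paradigm problem is that the same resource content can travel over a RESTful API, inside a message, or inside a document without being rewritten. The sketch below defines one Patient resource (hypothetical data) and reuses it unchanged in a REST request body, a Bundle of type "message" (whose first entry is a MessageHeader), and a Bundle of type "document" (whose first entry is a Composition). The MessageHeader and Composition are R4-style, deliberately abbreviated, and omit elements the specification requires, so they would not pass validation as written.

```python
import json

# One Patient resource (hypothetical data), written once.
patient = {
    "resourceType": "Patient",
    "id": "example-patient",
    "name": [{"family": "Doe", "given": ["Jane"]}],
    "birthDate": "1980-04-02",
}

# REST paradigm: the resource itself is the body of, e.g., PUT [base]/Patient/example-patient.
rest_body = json.dumps(patient)

# Messaging paradigm: a "message" Bundle whose first entry is a MessageHeader (abbreviated).
message_bundle = {
    "resourceType": "Bundle",
    "type": "message",
    "entry": [
        {"resource": {
            "resourceType": "MessageHeader",
            "eventCoding": {"system": "http://example.org/events", "code": "patient-update"},
            "source": {"endpoint": "http://example.org/sender"},
        }},
        {"resource": patient},
    ],
}

# Documents paradigm: a "document" Bundle whose first entry is a Composition (abbreviated).
document_bundle = {
    "resourceType": "Bundle",
    "type": "document",
    "entry": [
        {"resource": {
            "resourceType": "Composition",
            "status": "final",
            "title": "Discharge summary",
            "subject": {"reference": "Patient/example-patient"},
        }},
        {"resource": patient},
    ],
}


def find_patient(bundle: dict) -> dict:
    """Return the first Patient resource in a Bundle."""
    for entry in bundle["entry"]:
        if entry["resource"]["resourceType"] == "Patient":
            return entry["resource"]
    raise LookupError("no Patient in bundle")


# The Patient content is identical in all three paradigms.
assert json.loads(rest_body) == find_patient(message_bundle) == find_patient(document_bundle)
```

Only the wrapper changes between paradigms; the clinical content does not, which is the reuse Grahame was aiming for.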

Grahame admits it hasn’t been perfect, mainly because text and data can behave differently in each paradigm. Still, at least the data itself can stay consistent. His focus right now is to build the right tools and develop a well-integrated “terminology ecosystem” (covering SNOMED CT, LOINC, and countless other standards) to tap into all that information.
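Part of what a terminology ecosystem does is translate local codes into shared standards such as LOINC, so that data from different systems can be compared. In FHIR this is usually modeled with a ConceptMap resource and the $translate operation on a terminology server. The sketch below imitates that lookup with an in-memory dictionary; the local code system URL and its codes are hypothetical, while the LOINC codes are the standard ones for body weight (29463-7) and body height (8302-2).

```python
from typing import Dict, List, Tuple

LOCAL = "http://example.org/local-codes"  # hypothetical local code system
LOINC = "http://loinc.org"

# Hypothetical mapping table standing in for a ConceptMap on a terminology server.
CONCEPT_MAP: Dict[Tuple[str, str], List[Tuple[str, str]]] = {
    (LOCAL, "WT"): [(LOINC, "29463-7")],  # body weight
    (LOCAL, "HT"): [(LOINC, "8302-2")],   # body height
}


def translate(system: str, code: str) -> List[Tuple[str, str]]:
    """Look up standard targets for a local code (an in-memory stand-in for $translate)."""
    return CONCEPT_MAP.get((system, code), [])


def standardize(concept: dict) -> dict:
    """Return a copy of a CodeableConcept with any mapped standard codings appended."""
    codings = list(concept.get("coding", []))
    for coding in concept.get("coding", []):
        for target_system, target_code in translate(coding.get("system", ""), coding.get("code", "")):
            target = {"system": target_system, "code": target_code}
            if target not in codings:
                codings.append(target)
    return {**concept, "coding": codings}


weight = {"coding": [{"system": LOCAL, "code": "WT", "display": "Weight"}], "text": "Weight"}
print(standardize(weight)["coding"])
```

The original local coding is kept alongside the standard one, so nothing the source system recorded is lost while other systems can match on LOINC.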


What is FHIR’s 80-20 rule?

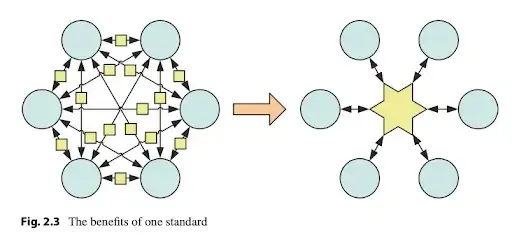

Grahame’s book, Principles of Health Interoperability, has a really interesting diagram.

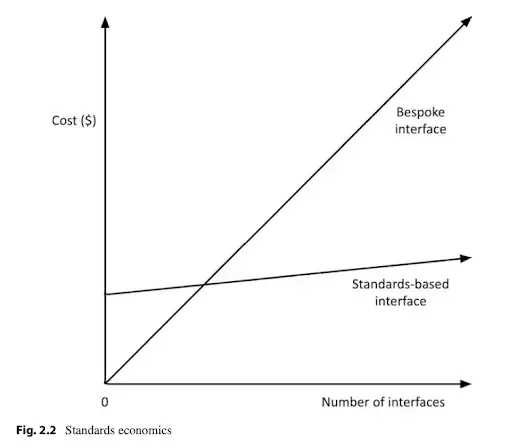

In the book, he also discusses how the total cost of standard interfaces compares with that of bespoke interfaces as the number of interfaces grows.
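A toy model makes this scaling argument concrete. The numbers and the linear cost curves below are assumptions for illustration, not figures from the book: each bespoke interface is assumed to cost the same fixed amount, while adopting a standard is assumed to need an up-front investment followed by a lower cost per interface.

```python
def bespoke_cost(n: int, per_interface: float) -> float:
    """Total cost of n bespoke interfaces, each built from scratch."""
    return n * per_interface


def standard_cost(n: int, upfront: float, per_interface: float) -> float:
    """Total cost of n standard interfaces: a one-off investment plus a cheaper marginal cost."""
    return upfront + n * per_interface


def break_even(upfront: float, bespoke_each: float, standard_each: float) -> int:
    """Smallest number of interfaces at which the standard costs no more than bespoke."""
    if standard_each >= bespoke_each:
        raise ValueError("a standard only pays off if its marginal cost is lower")
    n = 0
    while standard_cost(n, upfront, standard_each) > bespoke_cost(n, bespoke_each):
        n += 1
    return n


# Hypothetical numbers: bespoke = 10 units each; standard = 30 units up front + 4 units each.
for n in (1, 5, 20):
    print(n, bespoke_cost(n, 10), standard_cost(n, 30, 4))
print("break-even at", break_even(30, 10, 4), "interfaces")
```

With these made-up numbers the two approaches cost the same at five interfaces, and the standard is cheaper from then on; the real crossover depends entirely on actual costs.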


With this in mind, let’s look at the reasoning behind FHIR’s adoption of the 80-20 rule.


FHIR’s 80-20 rule is a guiding principle aimed at keeping the core FHIR standard simple and widely applicable, while still allowing flexibility for more specialized needs. Contrary to what some might think, Grahame explains that it’s not about capturing exactly 80% of healthcare data or what 80% of systems do. Instead, it’s about focusing on the elements and workflows that most people agree on and use regularly (the “80%”), and then providing a robust extension framework for the rare or highly specialized scenarios (the “20%”).

He goes on to say that if every unique or “esoteric” requirement made it into the core standard, FHIR would become so large and unwieldy that nobody could reasonably implement it. Core FHIR resources capture what most users and systems typically need, and keeping the standard straightforward for these common needs allows developers and organizations to implement it more quickly and consistently.

Grahame gives the example of diagnostic reporting, where systems share information in a largely uniform way, so nearly 100% of the common features land in the “80%.” Care planning, on the other hand, can differ wildly across organizations. For these specialized workflows, FHIR allows custom extensions and profiles, so the standard doesn’t balloon with features that only one or two organizations might use.

The 80-20 rule in FHIR ensures the standard strikes the right balance between broad adoption and customizability.
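In practice, the “20%” usually travels in extensions. Every FHIR extension is identified by a url (normally pointing to the StructureDefinition that defines it) and carries a single value[x] element such as valueString or valueCode. The sketch below attaches one hypothetical extension to an otherwise core CarePlan, the kind of locally specific care-planning detail Grahame describes, and reads it back.

```python
from typing import Any, Optional

# Hypothetical extension URL; a real one would be defined by a StructureDefinition in a profile.
CARE_PATHWAY_URL = "http://example.org/fhir/StructureDefinition/care-pathway"

care_plan = {
    "resourceType": "CarePlan",
    "status": "active",
    "intent": "plan",
    "subject": {"reference": "Patient/example-patient"},
    # Core elements above cover the common "80%"; the local detail rides in an extension.
    "extension": [
        {"url": CARE_PATHWAY_URL, "valueString": "local-pathway-a"}
    ],
}


def get_extension_value(resource: dict, url: str) -> Optional[Any]:
    """Return the value[x] of the first extension with the given url, or None."""
    for ext in resource.get("extension", []):
        if ext.get("url") == url:
            for key, value in ext.items():
                if key.startswith("value"):
                    return value
    return None


print(get_extension_value(care_plan, CARE_PATHWAY_URL))
print(get_extension_value(care_plan, "http://example.org/unknown"))
```

Systems that do not recognize an extension can generally ignore it, which keeps local additions from breaking everyone else; the exception is modifierExtension, which marks content that changes the meaning of the resource and must not be ignored.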

What Happens When Everyone Implements FHIR Differently?

FHIR is definitely easy to get started with, but when everyone implements it in their own way, interoperability becomes a struggle, even for basic tasks like recording vital signs. In theory, standards exist to keep data consistent (for example, by using one agreed-upon code and unit for blood pressure), but real-world factors such as local rules, business needs, or a simple lack of awareness can derail that consistency.

Some organizations choose to ignore established conventions because they believe they “know better,” while others might not even realize a standard approach exists. As a result, data that should flow seamlessly among systems can become scattered and confusing, explains Grahame.

In essence, FHIR’s adaptability is both its greatest strength and a source of challenges. It doesn’t impose a one-size-fits-all approach, and this flexibility lets organizations address their specific needs. However, assuming uniformity across FHIR implementations is a mistake that can lead to integration delays and interoperability issues. Success with FHIR depends on understanding these differences and planning accordingly.
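Blood pressure is a good illustration. The FHIR vital signs profile expects an Observation coded with LOINC 85354-9 (blood pressure panel), with systolic (8480-6) and diastolic (8462-4) components whose values use the UCUM unit mm[Hg]. The sketch below contrasts such a reading with the same numbers recorded under a hypothetical in-house code, and runs a deliberately narrow check that looks only at codes and units; it is not a substitute for validating against the actual profile.

```python
LOINC = "http://loinc.org"
UCUM = "http://unitsofmeasure.org"


def mmhg(value: float) -> dict:
    """A UCUM-coded pressure quantity."""
    return {"value": value, "unit": "mmHg", "system": UCUM, "code": "mm[Hg]"}


def has_coding(concept: dict, system: str, code: str) -> bool:
    """True if a CodeableConcept contains the given system/code pair."""
    return any(c.get("system") == system and c.get("code") == code
               for c in concept.get("coding", []))


standard_bp = {
    "resourceType": "Observation",
    "status": "final",
    "code": {"coding": [{"system": LOINC, "code": "85354-9"}]},
    "component": [
        {"code": {"coding": [{"system": LOINC, "code": "8480-6"}]}, "valueQuantity": mmhg(120)},
        {"code": {"coding": [{"system": LOINC, "code": "8462-4"}]}, "valueQuantity": mmhg(80)},
    ],
}

# The same reading under a hypothetical in-house code, with the value as free text.
local_bp = {
    "resourceType": "Observation",
    "status": "final",
    "code": {"coding": [{"system": "http://example.org/local-codes", "code": "BP"}]},
    "valueString": "120/80 mm Hg",
}


def uses_standard_bp_codes(obs: dict) -> bool:
    """Narrow check: LOINC panel code, both LOINC components, and UCUM mm[Hg] units."""
    if not has_coding(obs.get("code", {}), LOINC, "85354-9"):
        return False
    found = set()
    for component in obs.get("component", []):
        quantity = component.get("valueQuantity", {})
        if quantity.get("system") != UCUM or quantity.get("code") != "mm[Hg]":
            return False
        for code in ("8480-6", "8462-4"):
            if has_coding(component.get("code", {}), LOINC, code):
                found.add(code)
    return found == {"8480-6", "8462-4"}


print(uses_standard_bp_codes(standard_bp), uses_standard_bp_codes(local_bp))  # True False
```

Both records describe the same clinical fact, but only the first can be consumed by another system without a custom mapping.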

How is Versioning done in FHIR?

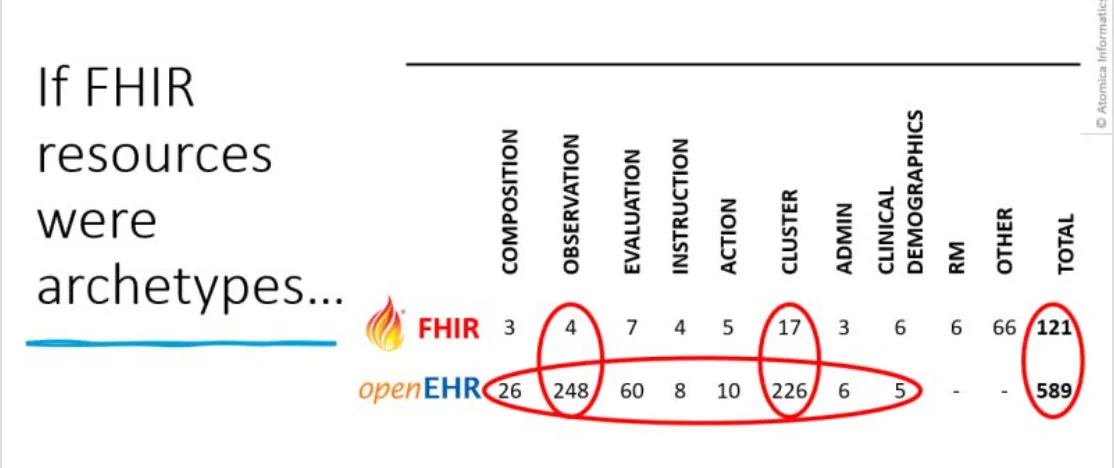

FHIR versions its API and its resource content together, whereas the openEHR community keeps the two completely separate. For context, Heather Leslie’s post highlights that openEHR currently has hundreds of semantic data models that correspond to a single FHIR Observation.

When HL7 publishes a new version of FHIR (e.g., R4 or R5), it includes both the core API rules, which govern the RESTful interactions (e.g., how to GET, POST, PUT, or DELETE resources) along with search parameters and other implementation rules, and the resource definitions.
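The core RESTful interactions follow a small set of URL patterns relative to a server’s base URL. The sketch below only builds request lines (it sends nothing); the base URL is hypothetical, while the patient and code search parameters and the system|code token syntax are defined by the specification for Observation.

```python
from urllib.parse import urlencode

BASE = "https://fhir.example.org/r4"  # hypothetical server base URL

# Core interactions and the HTTP method each uses; create and update send the resource as the body.
INTERACTIONS = {
    "read": ("GET", "{type}/{id}"),
    "create": ("POST", "{type}"),
    "update": ("PUT", "{type}/{id}"),
    "delete": ("DELETE", "{type}/{id}"),
}


def request_line(interaction: str, resource_type: str, resource_id: str = "") -> str:
    """Return 'METHOD url' for a core interaction."""
    method, template = INTERACTIONS[interaction]
    path = template.format(type=resource_type, id=resource_id)
    return f"{method} {BASE}/{path}"


def search_line(resource_type: str, **params: str) -> str:
    """Return the GET request for a search; urlencode percent-escapes ':', '/' and '|'."""
    return f"GET {BASE}/{resource_type}?{urlencode(params)}"


print(request_line("read", "Patient", "123"))
# GET https://fhir.example.org/r4/Patient/123
print(search_line("Observation", patient="123", code="http://loinc.org|85354-9"))
# GET https://fhir.example.org/r4/Observation?patient=123&code=http%3A%2F%2Floinc.org%7C85354-9
```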


According to Grahame, the two are versioned together because a small change to a resource can indirectly affect how clients and servers interact, which makes it difficult to fully separate the versioning of the API from the versioning of the data models.
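A concrete case of this coupling, to the best of our understanding of the two releases: in R4, MedicationRequest names its drug with the choice element medication[x] (for example medicationCodeableConcept), while R5 replaced it with a single medication element of type CodeableReference. Client code that reads the drug therefore has to know which release the server speaks, which it can learn from the fhirVersion in the CapabilityStatement returned by GET [base]/metadata. The medication code system below is hypothetical.

```python
from typing import List

# fhirVersion values reported by servers for the two releases handled here.
RELEASES = {"4.0.1": "R4", "5.0.0": "R5"}


def release_of(capability_statement: dict) -> str:
    """Map a CapabilityStatement's fhirVersion to a release name."""
    return RELEASES.get(capability_statement.get("fhirVersion", ""), "unknown")


def medication_codes(med_request: dict, release: str) -> List[str]:
    """Extract medication codes from a MedicationRequest whose shape depends on the release."""
    if release == "R4":
        # R4 choice element; medicationReference is ignored in this sketch.
        concept = med_request.get("medicationCodeableConcept", {})
    elif release == "R5":
        # R5: medication is a CodeableReference, with the coded form under "concept".
        concept = med_request.get("medication", {}).get("concept", {})
    else:
        raise ValueError(f"unsupported FHIR release: {release}")
    return [coding["code"] for coding in concept.get("coding", [])]


MED = {"coding": [{"system": "http://example.org/meds", "code": "med-001"}]}  # hypothetical
r4_request = {"resourceType": "MedicationRequest", "medicationCodeableConcept": MED}
r5_request = {"resourceType": "MedicationRequest", "medication": {"concept": MED}}

print(medication_codes(r4_request, release_of({"fhirVersion": "4.0.1"})))
print(medication_codes(r5_request, release_of({"fhirVersion": "5.0.0"})))
```

The branch on release is exactly the kind of code that a resource change forces onto clients, even though no API rule changed.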

He explains that some generic FHIR servers (like HAPI, Smile CDR, and commercial platforms from Google or Microsoft) do support loading resource definitions dynamically. This means you can, in principle, update data models without completely rewriting server logic. However, once you move beyond simple data storage and start doing meaningful processing—business rules, clinical decision support, and so on—developers often find it easier to reference resource elements directly in their code.

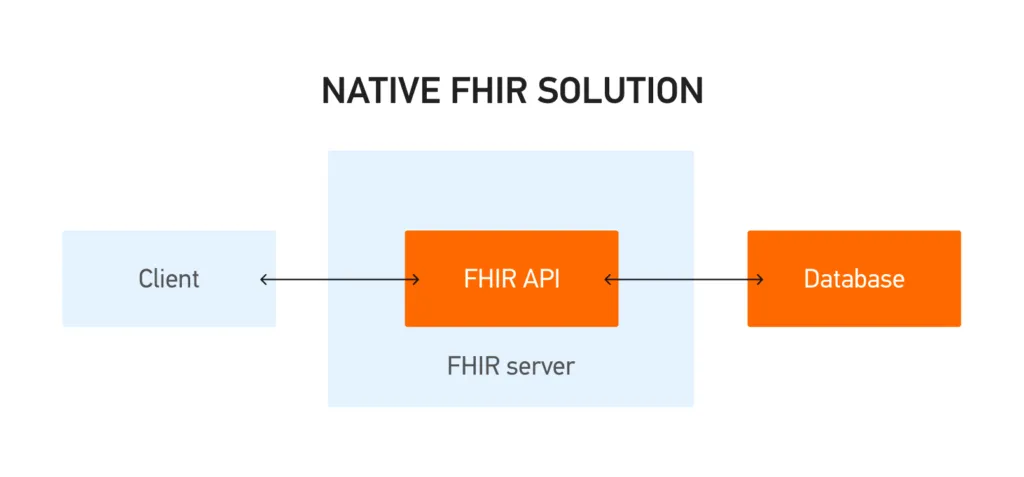

Using FHIR-native for Storage

One pattern we are seeing is that many organizations choose to store healthcare data natively in FHIR. At first glance, this seems like a natural extension of using FHIR for interoperability: if you already rely on FHIR to exchange data across systems, why not standardize your internal storage on the same format?

Grahame explains that FHIR was originally designed for data exchange, not for internal database structures. Its resources are intentionally organized in a way that makes them flexible and easy to share, but not necessarily optimal for fast, complex queries or large-scale transactional workloads.

If your system revolves around exchanging information with multiple stakeholders, where new data elements or fields need to be added on the fly, then a FHIR-native approach can be very attractive. You gain immediate interoperability, and changes like adding new fields or resource elements can happen almost instantly, without re-architecting your entire database. This is especially valuable in environments where requirements change rapidly or where you expect new data types frequently.
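The flexibility argument can be seen with a deliberately simple store: one table that keeps each resource’s JSON as-is. The sketch below uses Python’s built-in sqlite3 module and assumes the bundled SQLite includes its JSON functions (standard in recent versions); the table layout is our own illustration, not how any particular FHIR server stores data.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
# One generic table: resources are stored as JSON text, so new elements need no schema change.
conn.execute(
    "CREATE TABLE resources (type TEXT NOT NULL, id TEXT NOT NULL, body TEXT NOT NULL, "
    "PRIMARY KEY (type, id))"
)


def save(resource: dict) -> None:
    """Insert or overwrite a resource keyed by its type and id."""
    conn.execute(
        "INSERT OR REPLACE INTO resources (type, id, body) VALUES (?, ?, ?)",
        (resource["resourceType"], resource["id"], json.dumps(resource)),
    )


save({"resourceType": "Patient", "id": "p1", "birthDate": "1980-04-02"})
# A later record carries an element the first one lacked; the table is untouched.
save({"resourceType": "Patient", "id": "p2", "birthDate": "1975-11-20", "gender": "female"})

rows = conn.execute(
    "SELECT id FROM resources WHERE type = 'Patient' AND json_extract(body, '$.gender') = ?",
    ("female",),
).fetchall()
print(rows)  # [('p2',)]
```

The new gender element needed no migration, but the query has to parse JSON in every row it scans. SQLite can index an expression such as json_extract(body, '$.gender'), yet as queries grow more complex, this is where the performance trade-off Grahame describes starts to bite.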

For many, the real-world solution lies in a hybrid approach, according to Grahame. In this scenario, raw data is initially funneled into a FHIR data lake, ensuring a consistent, interoperable foundation.
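One common way to build on such a lake, offered here as an assumption rather than as Grahame’s recommendation, is to project the FHIR resources into flat, query-friendly rows for analytics or application-specific tables. The sketch below flattens vital-sign Observations into one row per measured value; the patient ids and values are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional

LOINC = "http://loinc.org"


@dataclass
class VitalRow:
    patient_id: str
    loinc_code: str
    value: float
    unit: str


def loinc_code(concept: dict) -> Optional[str]:
    """Return the first LOINC code in a CodeableConcept, if any."""
    for coding in concept.get("coding", []):
        if coding.get("system") == LOINC:
            return coding.get("code")
    return None


def flatten(observations: Iterable[dict]) -> List[VitalRow]:
    """Turn Observations into one row per measured value."""
    rows = []
    for obs in observations:
        patient_id = obs.get("subject", {}).get("reference", "").removeprefix("Patient/")
        # Panels such as blood pressure hold values in components; simple vitals use valueQuantity.
        parts = obs.get("component") or [obs]
        for part in parts:
            code = loinc_code(part.get("code", {}))
            quantity = part.get("valueQuantity")
            if code and quantity:
                rows.append(VitalRow(patient_id, code, float(quantity["value"]), quantity.get("unit", "")))
    return rows


lake = [
    {"resourceType": "Observation", "subject": {"reference": "Patient/p1"},
     "code": {"coding": [{"system": LOINC, "code": "29463-7"}]},
     "valueQuantity": {"value": 70, "unit": "kg"}},
    {"resourceType": "Observation", "subject": {"reference": "Patient/p1"},
     "code": {"coding": [{"system": LOINC, "code": "85354-9"}]},
     "component": [
         {"code": {"coding": [{"system": LOINC, "code": "8480-6"}]},
          "valueQuantity": {"value": 120, "unit": "mmHg"}},
         {"code": {"coding": [{"system": LOINC, "code": "8462-4"}]},
          "valueQuantity": {"value": 80, "unit": "mmHg"}},
     ]},
]

for row in flatten(lake):
    print(row)
```

Keeping the lake in FHIR preserves an interoperable source of truth, while the flat rows can be rebuilt whenever the projection needs to change.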


Grahame also notes that major cloud providers (like Google, AWS, and Microsoft) are already heavily optimizing their FHIR data stores. Some healthcare implementations in Africa even run on a single FHIR server, demonstrating that well-tuned FHIR-based systems can scale surprisingly far. Ultimately, whether to use FHIR as your primary data store depends on your application’s specific needs, the frequency of data changes, and your performance requirements.

Watch the complete interview with Grahame Grieve at our Digital Health Hackers Podcast here:

Final Thoughts

Looking ahead, Grahame acknowledges that FHIR may eventually become as ubiquitous as older standards, and could face the same criticisms too. Yet this cycle of improvement and dissatisfaction is more social than purely technical.

For now, the key is building solid, open-source validation tools and fostering collaboration so that different implementations converge, rather than fragment, leading us closer to true interoperability.